OpenAI Assistants are AI-powered virtual agents designed to help users with tasks across many domains. From answering complex questions to generating creative content and extracting insights from data, assistants are reshaping how we approach productivity, creativity, and problem-solving.

OpenAI Assistants can act almost like intelligent companions. They can understand and generate human-like text, which makes communicating with them feel natural. Whether answering questions about a hotel or suggesting improvements, they show a degree of understanding and adaptability that feels remarkably human-like.

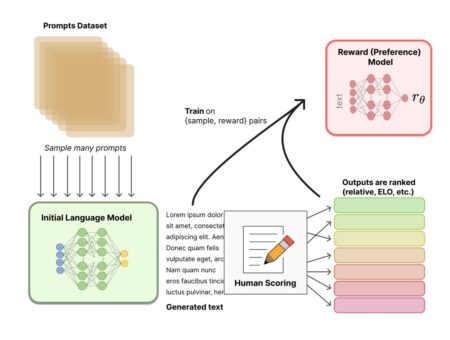

OpenAI Assistants are built on transformer-based language models trained on large text corpora, which gives them a strong ability to understand and generate human language. Through pre-training and fine-tuning, these models can be adapted to specific tasks or domains. They rely on attention mechanisms and large-scale training to capture linguistic nuances. Open challenges include scalability, model compression, domain adaptation, bias mitigation, and interpretability, all of which remain active areas of research and development.

In the hospitality industry, guest reviews can guide hotels toward success. By using OpenAI Assistants to analyze these reviews, hoteliers can uncover actionable insights and implement targeted strategies to improve every aspect of the guest experience. This ranges from the quality of accommodation to experiences tailored to different guest segments. The path to hotel excellence begins with listening to, learning from, and adapting to guest feedback.

As industries like hospitality embrace the potential of OpenAI Assistants, it’s important to remember that these tools are not here to replace human expertise but to enhance it. The symbiotic relationship between OpenAI Assistants and human expertise promises to unlock new insights and elevate guests’ experiences, making each individual’s contribution invaluable.

Which insights from guest reviews would help you improve your hotel? Consider the following areas:

Accommodation:

– Room cleanliness

– Comfort of beds and amenities

– Noise levels

– Views

– Accessibility features

Service:

– Friendliness and helpfulness of staff

– Efficiency of check-in/check-out

– Responsiveness to requests

– Communication clarity

Facilities:

– Pool

– Spa

– Gym

– Restaurant

– Bar

– Common areas

– Parking

– Wi-Fi quality

Location:

– Convenience to local attractions

– Transportation options

– Surrounding atmosphere

Food and Beverage:

– Restaurant menu variety and quality

– Service in the restaurant and bar

– Special dietary options

– Room service

Comparative Feedback:

– How does your hotel compare to others in the area or in a similar price range?

Considerations Based on Guest Type

Families:

– Kid-friendly amenities

– Childcare services

– Safety features

– Entertainment options

Business Travellers:

– Workspaces

– Reliable internet

– Meeting facilities

– Airport accessibility

Solo Travellers:

– Safe and comfortable single rooms

– Social events or activities

– Helpful recommendations for exploring the area
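These questions can be turned into the instructions an Assistant follows when it reads a batch of reviews. The sketch below is a minimal example built on several assumptions. The dictionary names `REVIEW_TOPICS` and `GUEST_TOPICS`, the helper `build_instructions`, and the prompt wording are all hypothetical. The code only assembles a string and does not call any OpenAI API.

```python
# Hypothetical grouping of the review topics listed above.
REVIEW_TOPICS = {
    "Accommodation": [
        "Room cleanliness", "Comfort of beds and amenities", "Noise levels",
        "Views", "Accessibility features",
    ],
    "Service": [
        "Friendliness and helpfulness of staff", "Efficiency of check-in/check-out",
        "Responsiveness to requests", "Communication clarity",
    ],
    "Facilities": [
        "Pool", "Spa", "Gym", "Restaurant", "Bar", "Common areas", "Parking",
        "Wi-Fi quality",
    ],
    "Location": [
        "Convenience to local attractions", "Transportation options",
        "Surrounding atmosphere",
    ],
    "Food and Beverage": [
        "Restaurant menu variety and quality", "Service in the restaurant and bar",
        "Special dietary options", "Room service",
    ],
    "Comparative Feedback": [
        "How the hotel compares to others in the area or in a similar price range",
    ],
}

# Hypothetical extra topics per guest segment.
GUEST_TOPICS = {
    "Families": [
        "Kid-friendly amenities", "Childcare services", "Safety features",
        "Entertainment options",
    ],
    "Business Travellers": [
        "Workspaces", "Reliable internet", "Meeting facilities",
        "Airport accessibility",
    ],
    "Solo Travellers": [
        "Safe and comfortable single rooms", "Social events or activities",
        "Helpful recommendations for exploring the area",
    ],
}


def build_instructions(guest_types=()):
    """Assemble a plain-text instruction prompt from the topic lists (hypothetical helper)."""
    lines = [
        "You analyze hotel guest reviews. For each topic below, summarize what "
        "guests praise and criticize, and suggest concrete improvements."
    ]
    for area, topics in REVIEW_TOPICS.items():
        lines.append(f"{area}:")
        lines.extend(f"- {topic}" for topic in topics)
    # Only add the guest segments the caller asks for; unknown names raise KeyError.
    for guest in guest_types:
        lines.append(f"Considerations for {guest}:")
        lines.extend(f"- {topic}" for topic in GUEST_TOPICS[guest])
    return "\n".join(lines)


instructions = build_instructions(["Business Travellers"])
print(instructions)
```

The resulting string could then be supplied as an Assistant's instructions, for example through the `instructions` parameter when creating an assistant with the OpenAI Python SDK. Check the current OpenAI API reference before relying on that interface, since it may change.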

Architecture of OpenAI Assistants

Transformer Architecture:

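The transformer architecture allows the model to dynamically weigh the importance of different words or tokens within a sequence, enabling it to capture long-range dependencies and contextual nuances. Recurrent neural networks (RNNs) process a sequence one token at a time, with each step depending on the previous one. Transformers instead process all positions of an input sequence in parallel. Convolutional neural networks (CNNs) relate only nearby tokens within each layer, whereas a single attention layer connects every position to every other position directly. Together, these properties make transformers efficient to train on modern parallel hardware and effective at modelling long-range context. Text generation itself still proceeds one token at a time, but each step can attend to the whole preceding context.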
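The difference in dependency structure can be sketched with toy numbers. This is a minimal illustration under simplifying assumptions, not how production models are implemented. Tokens are single floats, and the self-attention uses the raw inputs directly as queries, keys, and values. There are no learned projections, there is a single head, and there is no scaling, since the dimension is 1. The function names are hypothetical.

```python
import math


def rnn_states(xs, w=0.5, u=1.0):
    """Toy RNN: each hidden state needs the previous one, so steps must run in order."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w * h + u * x)  # h_t depends on h_{t-1}
        states.append(h)
    return states


def attend(i, xs):
    """Toy self-attention output for position i.

    Queries, keys, and values are the raw 1-d inputs (no learned projections),
    so each score is just a product. The output reads every input directly and
    never uses another position's output.
    """
    scores = [xs[i] * xj for xj in xs]
    m = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return sum((e / total) * xj for e, xj in zip(exps, xs))


def self_attention(xs):
    # Positions are independent of one another, so this loop could be parallelised.
    return [attend(i, xs) for i in range(len(xs))]


tokens = [0.2, -1.0, 0.7, 1.5]  # hypothetical 1-d token embeddings
print(rnn_states(tokens))
print(self_attention(tokens))
```

In `rnn_states`, the state for step t cannot be computed until step t-1 has finished. In `attend`, position i reads only the inputs, so every position can be computed in any order or at the same time.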

Pre-Training and Fine-Tuning:

Central to the effectiveness of transformer models is pre-training on a vast corpus of text, followed by fine-tuning on specific tasks or domains. During pre-training, the model learns general language patterns and semantics, acquiring a broad understanding of linguistic structure. Fine-tuning then adapts this knowledge to the nuances of particular tasks or domains, which typically improves performance in real-world applications.
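The two-stage idea can be illustrated with a deliberately tiny model. A count-based bigram model stands in here; it is not a transformer, and this is not how OpenAI trains its models. The model is first "pre-trained" on general sentences. It is then "fine-tuned" by continuing to update the same counts on hypothetical hotel-review sentences, which are given extra weight. The class name, both corpora, and the weight value are assumptions made for illustration.

```python
from collections import Counter, defaultdict


class BigramModel:
    """Toy language model: P(next word | previous word) from bigram counts."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, corpus, weight=1):
        # Continue updating the same counts; 'weight' scales how much this data counts.
        for sentence in corpus:
            words = sentence.lower().split()
            for prev, nxt in zip(words, words[1:]):
                self.counts[prev][nxt] += weight

    def prob(self, prev, nxt):
        total = sum(self.counts[prev].values())
        return self.counts[prev][nxt] / total if total else 0.0


# Hypothetical corpora: broad "general" text, then a small hotel-review sample.
general_corpus = ["the weather was cold", "the movie was long", "the road was busy"]
review_corpus = ["the room was clean", "the bed was comfortable",
                 "the room was clean and quiet"]

model = BigramModel()
model.train(general_corpus)            # "pre-training"
before = model.prob("was", "clean")    # 0.0: never seen in the general text
model.train(review_corpus, weight=2)   # "fine-tuning" on domain data
after = model.prob("was", "clean")     # 4/9, about 0.444
print(before, round(after, 3))
```

Before fine-tuning, the model has never seen "was clean". Afterwards, that continuation dominates, while general-domain statistics such as "the weather" are still present. Real fine-tuning updates neural network weights by gradient descent rather than adding to counts.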

Attention Mechanisms:

Attention mechanisms determine which parts of the input sequence the model focuses on when producing each output. Through attention, transformers assign different weights to different words or tokens, allowing them to integrate relevant context from anywhere in the sequence. Stacking many attention layers also lets the model learn increasingly abstract, hierarchical representations of language, which improves its ability to both understand and generate text.
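For reference, the textbook form of this mechanism is the scaled dot-product attention of the original transformer design, shown below. Whether the models behind OpenAI Assistants use exactly this variant is not specified here, so treat it as the standard formulation rather than a description of their implementation. Q, K, and V are the query, key, and value matrices, obtained as learned linear projections of the token representations, and d_k is the key dimension.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V,
\qquad
\mathrm{softmax}(z)_j = \frac{e^{z_j}}{\sum_{l} e^{z_l}}
```

The softmax is applied to each row of the score matrix. As a result, the weights that one position assigns to all positions are non-negative and sum to 1. These weights are the "varying degrees of importance" described above.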